Artificial intelligence is transforming modern software, powering everything from personalised recommendations to advanced automation.

But here is the thing: with great power comes great responsibility. And as powerful as AI is, it doesn’t operate in a vacuum. The more we rely on data to train and run these systems, the more important it becomes to handle that data responsibly. So, if your AI-powered product handles any personal data, you cannot afford to overlook data privacy.

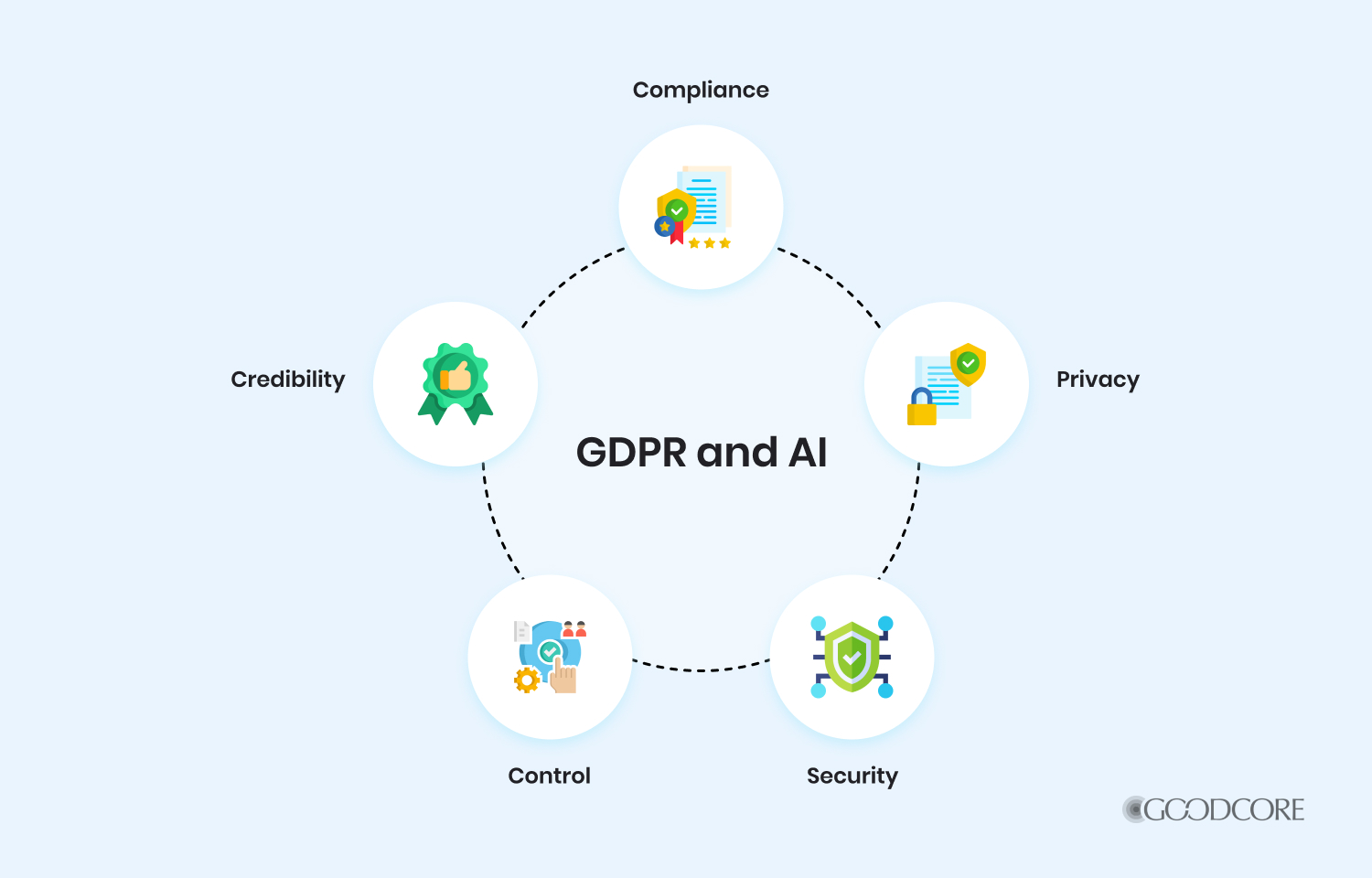

That is where data privacy and regulatory compliance frameworks, like the General Data Protection Regulation, come in. Understanding how GDPR applies to AI is important because it helps you build user trust and ensure long-term success.

With the help of our expert AI consultants, we have written this blog post to walk you through the key privacy principles, common challenges, and some simple steps to help you stay compliant while making the most of artificial intelligence in your business.

What is GDPR?

The General Data Protection Regulation (GDPR) is a European Union data privacy law that came into effect in 2018. It governs how companies collect, store, and use personal data, and requires them to do all of this responsibly.

However, GDPR does not just apply to companies based in the EU. It applies to any business that processes the personal data of people in the EU, for example by offering them goods or services or monitoring their behaviour, whether you are based in London, Los Angeles or Luxembourg.

For a deeper dive into this topic, check out our detailed guide on the 7 Principles of GDPR.

Why does GDPR matter for AI systems?

AI and the GDPR are closely connected. AI systems often rely on massive datasets, which frequently include personal or sensitive information. The GDPR ensures that this kind of data is strongly protected, and rightfully so.

So, if your AI tool processes any form of user data, you need to understand and follow the GDPR’s requirements from the very beginning of development, not as an afterthought. For example, if you train an AI model to make product recommendations on an e-commerce website, it may learn from users’ past actions or profile data to make those recommendations.

That information falls squarely under GDPR rules, so you will need a lawful basis for processing it, a plan for keeping it secure, and a way to respond to users who want to access or delete their data.

Key GDPR concepts that are relevant to AI

Once you understand the core principles of GDPR, it becomes much easier to design AI solutions that are both innovative and compliant.

In this section, we will walk you through the key GDPR concepts that specifically affect how AI products are built and used.

Personal data and special categories

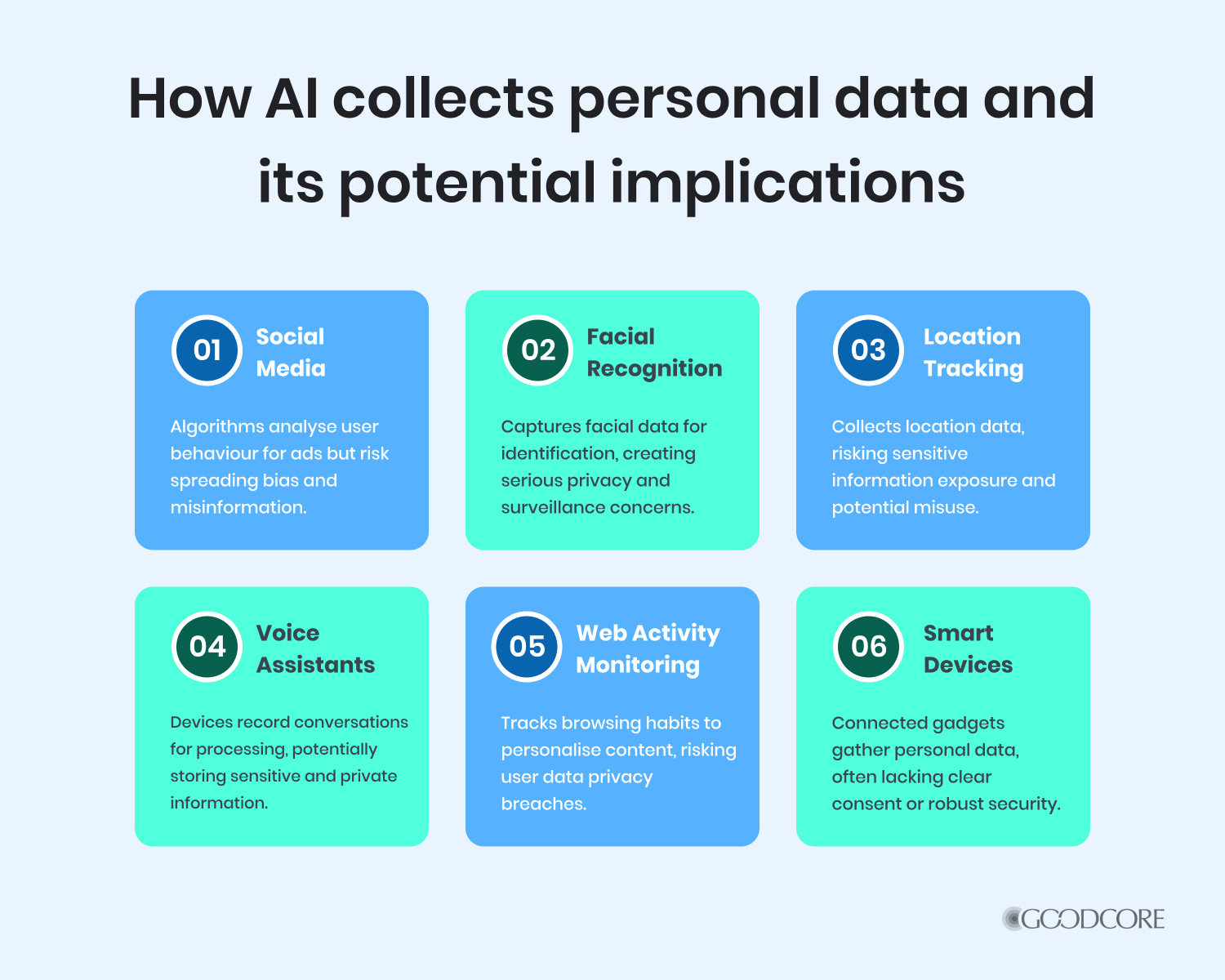

Personal data means anything that can directly or indirectly identify someone, such as user names, email addresses, device IDs, voice recordings, purchase history and user behaviour.

One step further are special categories of personal data, which include sensitive information such as health records, genetic data, biometric data, religious beliefs, and racial or ethnic origin.

Both types of data are protected under GDPR, but special category data is subject to stricter rules: under Article 9, processing it is prohibited unless a specific condition applies, such as the person’s explicit consent.

Lawful basis for processing

GDPR says that you need a valid reason, called a “lawful basis”, to process someone’s data. Article 6 sets out six of them. Picking the right one is important, and you will need to document your choice.

Here are the most common ones you might come across when working with AI:

- Consent: The user has agreed to the specific ways you will use their data. Consent must be freely given, specific, informed, and as easy to withdraw as it was to give.

- Contract: You are using their data to deliver something they signed up for, like providing a service or fulfilling an order.

- Legal obligation: You have to use the data because the law requires it. (This one rarely applies to AI use cases.)

- Legitimate interest: You are using the data for a genuine business purpose, in ways your users would reasonably expect. However, your interest cannot override their rights and freedoms.

Automated decision-making and profiling

This area is interesting, but it can be tricky as well. It falls under Article 22, the part of the General Data Protection Regulation that deals specifically with automated decision-making and profiling.

Here is what the article says in simple terms:

People have the right not to be subject to decisions made solely by automated systems when those decisions have legal or similarly significant effects on them (like automatically rejecting a loan application or screening out job applicants without any human review).

This right has a few specific exceptions. So, if your AI system makes such decisions without any human involvement, you need to make sure that:

- It is necessary for entering into or performing a contract with the person,

- It is authorised by law,

- You have obtained the person’s explicit consent, or

- A real person meaningfully reviews the AI’s decision before it is final, so the decision is no longer solely automated.
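A minimal sketch of how a product might enforce this: a gate that refuses to finalise a significant automated decision unless a documented exception applies or a human has reviewed it. The `Decision` class, its fields and `can_finalise` are hypothetical names for illustration; deciding what counts as a "significant effect" or a valid exception is a legal judgement the code cannot make for you.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    subject_id: str
    proposed_outcome: str              # what the model recommends, e.g. "reject"
    significant_effect: bool           # legal or similarly significant effect?
    reviewed_by: Optional[str] = None  # ID of the human reviewer, if any


def can_finalise(decision: Decision, exception_documented: bool = False) -> bool:
    """Return True if the decision may take effect (hypothetical policy check).

    Decisions without a significant effect pass straight through. Significant
    ones need a documented exception (contract, law or explicit consent) or a
    human reviewer. The review must be meaningful, not a rubber stamp.
    """
    if not decision.significant_effect:
        return True
    return exception_documented or decision.reviewed_by is not None


# Usage: a loan rejection is held until a person has looked at it.
loan = Decision("applicant-42", "reject", significant_effect=True)
held = not can_finalise(loan)          # True: route to the review queue
loan.reviewed_by = "credit-analyst-7"
released = can_finalise(loan)          # True: a human has reviewed it
```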

If you are unsure which model would best suit your business needs, you can find out more in our blog post: How To Choose The Right AI Model For Your Project.

Data subject rights

Under the GDPR, people (referred to as data subjects) have rights, and your AI system should respect them. To make sure you can meet those rights, you should be able to answer these five fundamental questions:

- What data do you hold on each person?

- Can they correct inaccurate information?

- Can they delete their data?

- Can they get their data in a portable format?

- Can they object to how their data is being used?

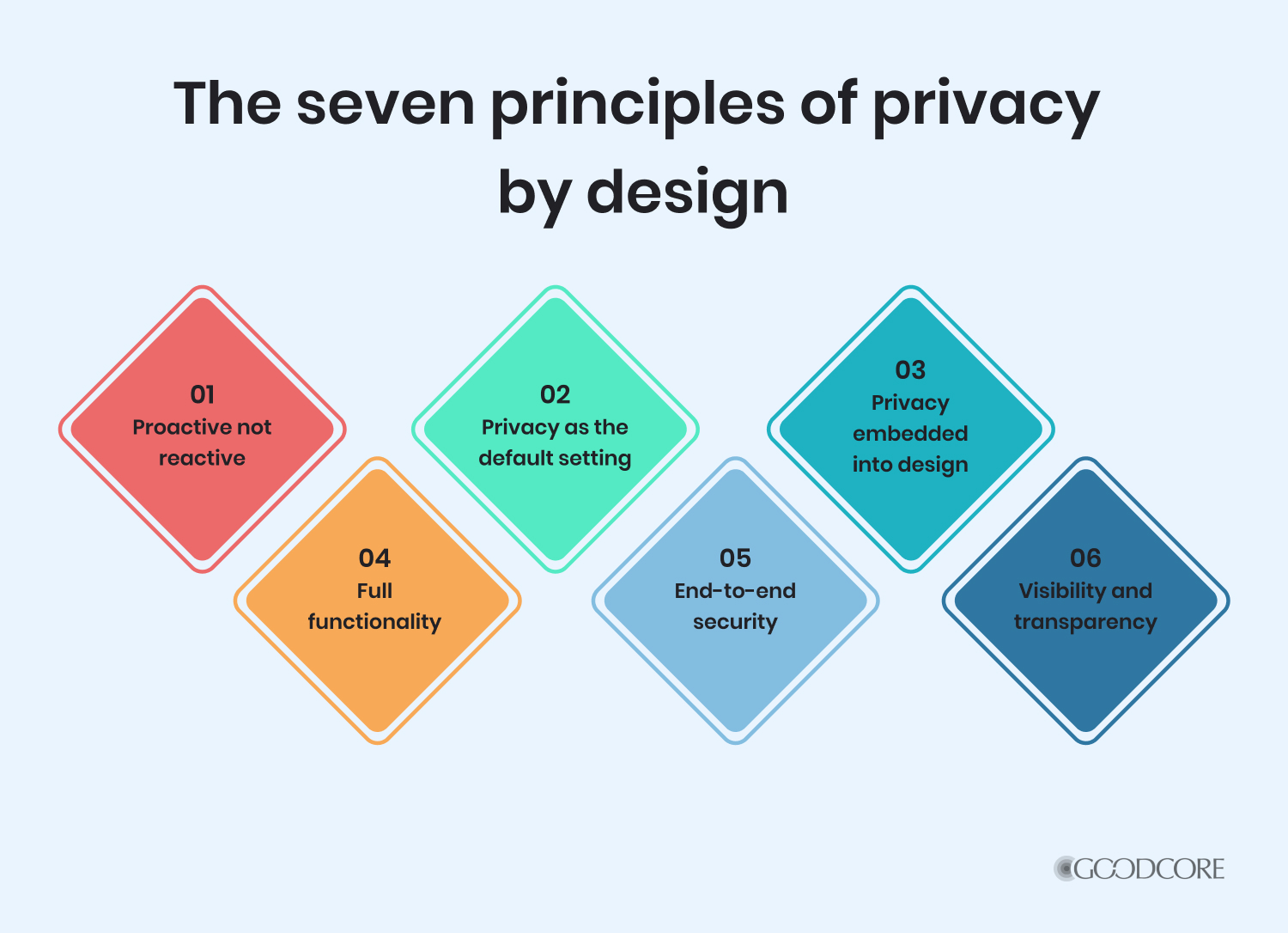

Privacy by design and by default

User data privacy is of the utmost importance, especially if you want to build and maintain long-term trust with your customers. Article 25 of the GDPR puts this into practice through two principles.

One: Privacy by design. You build privacy into your product from day one, proactively integrating it into the software architecture, data flows, and even the user interface.

Two: Privacy by default. The most privacy-protective settings should apply automatically, and only the personal data needed for each purpose should be processed; users should not have to dig around to protect their rights.
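As a rough illustration of privacy by default, the sketch below models a hypothetical settings object in which every optional use of personal data starts switched off, so users opt in rather than having to opt out. The field names and the 30-day retention value are assumptions, not recommendations.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PrivacySettings:
    # Every optional processing purpose defaults to the most protective value.
    personalised_recommendations: bool = False
    use_data_for_model_training: bool = False
    share_with_partners: bool = False
    history_retention_days: int = 30  # assumed shortest period the product supports


# A new account gets the protective defaults without the user doing anything.
new_user = PrivacySettings()

# Anything less protective only happens through an explicit user choice.
after_opt_in = replace(new_user, personalised_recommendations=True)
```

Making the settings immutable means every change produces a new object, which makes it straightforward to log when and how a user changed their choices.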

Common GDPR challenges in AI development

Applying GDPR to AI is not always straightforward. We have briefly listed some of the challenges developers often run into below:

Difficulty in ensuring transparency (AI explainability)

One of the biggest hurdles with AI is that it does not always explain how it arrives at a decision. Deep learning models, in particular, are often referred to as “black boxes” because their internal workings can be difficult to understand, even for the people who built them.

But under GDPR, your system needs to offer some level of transparency or interpretability, because users have the right to meaningful information about the logic involved when an automated decision significantly affects them.

Managing consent and lawful basis for data processing

When you are building or training an AI model, getting user consent can be a complex task. You need to make sure that the consent is informed, specific, and freely given. On top of that, datasets are often reused for multiple purposes, like training, testing, and ongoing optimisation, which might go beyond what the user originally agreed to.

Deciding between consent and other lawful bases (like legitimate interest) becomes even more important as your AI system evolves.
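One way to keep reuse within what users actually agreed to is to record consent per purpose and filter each dataset by the purpose it is used for. This is a minimal in-memory sketch; `ConsentRegistry`, `filter_for_purpose` and the purpose names are hypothetical, and a real system would persist these records as evidence of consent.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Stores consent per (user, purpose) pair, not as a single yes/no flag."""

    def __init__(self) -> None:
        self._grants: dict = {}  # (user_id, purpose) -> time consent was given

    def grant(self, user_id: str, purpose: str) -> None:
        self._grants[(user_id, purpose)] = datetime.now(timezone.utc)

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Withdrawing should be as easy as granting: one call, no conditions.
        self._grants.pop((user_id, purpose), None)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        return (user_id, purpose) in self._grants


def filter_for_purpose(records, registry, purpose):
    """Keep only records whose owner consented to this specific purpose."""
    return [r for r in records if registry.has_consent(r["user_id"], purpose)]


registry = ConsentRegistry()
registry.grant("u1", "model_training")
registry.grant("u2", "recommendations")  # u2 never agreed to training

records = [{"user_id": "u1", "clicks": 12}, {"user_id": "u2", "clicks": 3}]
training_set = filter_for_purpose(records, registry, "model_training")

registry.withdraw("u1", "model_training")
after_withdrawal = filter_for_purpose(records, registry, "model_training")
```

The filter only affects datasets built after the check runs; it does not by itself remove anyone's data from a model that has already been trained.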

Risks with data retention and storage

AI systems often rely on historical data to improve performance over time. The problem is that GDPR’s storage limitation principle requires you to keep personal data only for as long as you need it for the purpose you collected it for. Storing too much data for longer than necessary can put you in violation of the rules.

So, you will need a clear data retention policy and technical processes to regularly delete or anonymise unused data, without hurting the performance of your model.
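A retention policy only helps if something enforces it. The sketch below splits records into those still within a retention period and those that have expired, so the expired ones can be deleted or anonymised before the next training run. The 365-day period and the `collected_at` field are assumptions for illustration; your retention periods should come from your own documented policy.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=365)  # assumed policy value, not a GDPR-mandated figure


def apply_retention(records, now=None):
    """Return (kept, expired) based on each record's collection time."""
    now = now or datetime.now(timezone.utc)
    kept, expired = [], []
    for record in records:
        age = now - record["collected_at"]
        (kept if age <= RETENTION else expired).append(record)
    return kept, expired


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "collected_at": datetime(2024, 6, 1, tzinfo=timezone.utc)},
    {"id": 2, "collected_at": datetime(2023, 6, 1, tzinfo=timezone.utc)},
]
kept, expired = apply_retention(records, now)
# Next step (not shown): delete or anonymise `expired` in every store that holds it.
```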

Handling sensitive data and automated decisions

As we mentioned earlier, processing sensitive information such as biometric or health data subjects your company to stricter compliance requirements. These types of data require a higher standard of care and, often, explicit user consent.

Furthermore, if your system makes automated decisions based on this data, you must ensure human involvement, explainability, and the ability for users to contest the outcome.

Cross-border data transfer complexities

Many AI systems and cloud services store or process data across different countries. If any of that data ends up outside the EU, you will need to comply with GDPR’s rules for international data transfers.

This often involves using tools like Standard Contractual Clauses (SCCs) or choosing service providers that store data in the EU or in countries covered by an EU adequacy decision. Failing to do this properly can expose your business to major legal and financial risks.
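Keeping track of where each provider stores data makes transfer gaps easier to spot. The sketch below assumes a hypothetical vendor list with a `region` and an `scc_signed` flag, and flags providers outside the allow-list that have no transfer mechanism recorded. The allow-list is deliberately minimal; which destinations are covered by an adequacy decision changes over time and should be checked against the European Commission's current list.

```python
# Destinations that need no extra transfer mechanism. "EEA" only, as a
# placeholder; add adequacy destinations after verifying them yourself.
NO_MECHANISM_NEEDED = {"EEA"}


def transfers_missing_safeguards(vendors):
    """Return names of vendors whose data leaves the allow-list without SCCs."""
    return [
        v["name"]
        for v in vendors
        if v["region"] not in NO_MECHANISM_NEEDED and not v.get("scc_signed", False)
    ]


vendors = [
    {"name": "vector-database", "region": "EEA"},
    {"name": "llm-api", "region": "US", "scc_signed": False},
    {"name": "analytics", "region": "US", "scc_signed": True},
]
gaps = transfers_missing_safeguards(vendors)
```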

Practical steps to ensure GDPR compliance in AI

Let’s now look at what you can do to stay GDPR-compliant without overwhelming your development team.

1. Data mapping and inventory

Start by documenting the following:

- What data you are collecting

- Where it comes from

- Where it is stored and processed

- Who has access to it

- How it is used in your AI system
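A data inventory can start as one structured record per data item. In this hypothetical sketch, each `InventoryEntry` answers the five points above, and a helper flags entries that need attention: special category data, and data with no documented AI use (a candidate for not collecting at all). The field names and example values are made up.

```python
from dataclasses import dataclass


@dataclass
class InventoryEntry:
    name: str                # what data you are collecting
    source: str              # where it comes from
    location: str            # where it is stored and processed
    access: list[str]        # who has access to it
    ai_use: str              # how it is used in your AI system ("" if unused)
    special_category: bool = False  # health, biometrics, etc.


def review_flags(inventory):
    """Group entries that deserve a closer look during a compliance review."""
    return {
        "special_category": [e.name for e in inventory if e.special_category],
        "no_documented_use": [e.name for e in inventory if not e.ai_use],
    }


inventory = [
    InventoryEntry("purchase_history", "checkout", "EU data warehouse",
                   ["data-science"], "recommendation model training"),
    InventoryEntry("date_of_birth", "sign-up form", "EU user database",
                   ["support"], ""),
    InventoryEntry("heart_rate", "wearable app", "EU data warehouse",
                   ["data-science"], "fitness predictions", special_category=True),
]
flags = review_flags(inventory)
```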

2. Choose the right lawful basis

You will need to carefully analyse which lawful basis suits your AI system or tool.

If you are unsure whether to go with consent or legitimate interest, here is a good rule of thumb:

- Use consent when data is sensitive or the processing might surprise users.

- Use legitimate interest if it is a standard business operation and you have carried out and documented a legitimate interests assessment that weighs your interests against users’ rights.
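The rule of thumb above can be written down as a small helper, mainly to make the reasoning explicit and reviewable in your documentation. This is a hypothetical sketch of the heuristic only; it is not a substitute for legal advice, and special category data also needs one of the Article 9 conditions on top of a lawful basis.

```python
def suggest_lawful_basis(sensitive: bool, would_surprise_users: bool,
                         balancing_test_documented: bool) -> str:
    """Apply the consent-versus-legitimate-interest rule of thumb."""
    if sensitive or would_surprise_users:
        return "consent"
    if balancing_test_documented:
        return "legitimate interest"
    # Neither condition settled: complete the assessment before choosing.
    return "undecided"


health_model_basis = suggest_lawful_basis(True, False, False)
fraud_check_basis = suggest_lawful_basis(False, False, True)
```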

3. Implement privacy by design

As we said above, it is important that you implement the necessary privacy features during the design phase, not after deployment. Make sure:

- Only necessary data is collected

- Data is stored securely

- Users have clear controls over their privacy settings
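Collecting only necessary data is one of the easiest privacy-by-design features to enforce in code: decide which fields each purpose needs and drop everything else before it is stored. The purpose name and allow-listed fields below are hypothetical.

```python
# Hypothetical allow-list: the only fields each processing purpose needs.
ALLOWED_FIELDS = {
    "recommendations": {"user_id", "purchase_history", "viewed_items"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only allow-listed fields for the purpose.

    An unknown purpose raises KeyError, so nothing is stored by accident.
    """
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "user_id": "u1",
    "email": "alice@example.com",
    "date_of_birth": "1990-01-01",
    "purchase_history": ["sku-1"],
    "viewed_items": ["sku-2", "sku-3"],
}
stored = minimise(raw, "recommendations")
```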

4. Use anonymisation or pseudonymisation

- Anonymise: irreversibly transform the data so that a person can no longer be identified by any means reasonably likely to be used, including by combining it with other data.

- Pseudonymise: replace personal details, like names or emails, with artificial identifiers or codes. The data can still be traced back to a person using additional information (such as a key or lookup table) that you keep separately.

Where possible, anonymise or pseudonymise your data so that the users cannot be easily identified. This helps reduce legal risks and is encouraged under GDPR.
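A common way to pseudonymise identifiers is a keyed hash such as HMAC-SHA256, sketched below with Python's standard library. The same identifier and key always produce the same pseudonym, so records can still be joined for training. Anyone holding the key can re-link pseudonyms to people by hashing known identifiers, so the key must be stored separately under restricted access, and the output is still personal data under GDPR.

```python
import hashlib
import hmac
import secrets


def pseudonymise(identifier: str, key: bytes) -> str:
    """Return a stable pseudonym: the hex HMAC-SHA256 of the identifier."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# In practice the key would come from a secrets manager, kept apart from the data.
key = secrets.token_bytes(32)

alice_1 = pseudonymise("alice@example.com", key)
alice_2 = pseudonymise("alice@example.com", key)
bob = pseudonymise("bob@example.com", key)
```

A plain unkeyed hash (for example, SHA-256 of an email address) is weaker, because anyone can hash a list of known email addresses and match the results.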

5. Make AI decisions explainable

Even if your algorithm is complex, there are ways to make its outputs understandable. You can use techniques like LIME or SHAP to show which features influenced a decision, display confidence scores or risk levels, and provide visual explanations of individual decisions.
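For simple models, an explanation can be computed exactly. The sketch below assumes a hypothetical linear credit-scoring model and reports each feature's contribution as weight × (value − baseline). When the baseline is the average feature value, this is what SHAP values reduce to for a linear model with independent features; complex models need approximation tools such as the SHAP or LIME libraries instead. The features, weights and values are made up.

```python
def linear_contributions(weights, baseline, x):
    """Contribution of each feature to score(x) - score(baseline) for a linear model."""
    return {name: w * (x[name] - baseline[name]) for name, w in weights.items()}


weights = {"income_k": 0.5, "existing_debt_k": -0.8, "years_at_address": 0.1}
baseline = {"income_k": 40.0, "existing_debt_k": 10.0, "years_at_address": 5.0}
applicant = {"income_k": 30.0, "existing_debt_k": 15.0, "years_at_address": 8.0}

contributions = linear_contributions(weights, baseline, applicant)

# Largest effects first: this ordering can back a plain-language explanation,
# e.g. "lower income and higher existing debt reduced your score".
ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
```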

6. Prepare for data subject requests

You should have a system in place to handle:

- User data access requests

- Data deletion or correction

- Data portability requests

- Opt-outs and objections to processing

If your AI product cannot support these functions, your business is not ready to operate in a GDPR-regulated environment.
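To make these request types concrete, here is a toy in-memory store that supports access, correction, deletion, export in a portable format (JSON), and objections. `UserDataStore` and its methods are hypothetical; a production system would also verify the requester's identity, log each request, respond within the one-month deadline, and propagate deletions to backups, feature stores and training datasets.

```python
import json


class UserDataStore:
    """Toy store illustrating data subject requests (hypothetical design)."""

    def __init__(self) -> None:
        self._data: dict = {}          # user_id -> personal data fields
        self._objections: set = set()  # users who objected to processing

    def access(self, user_id: str) -> dict:
        return dict(self._data.get(user_id, {}))  # a copy, not the live record

    def correct(self, user_id: str, field: str, value) -> None:
        self._data.setdefault(user_id, {})[field] = value

    def delete(self, user_id: str) -> None:
        self._data.pop(user_id, None)

    def export(self, user_id: str) -> str:
        # Portability: a structured, machine-readable format.
        return json.dumps(self.access(user_id), sort_keys=True)

    def record_objection(self, user_id: str) -> None:
        self._objections.add(user_id)

    def may_process(self, user_id: str) -> bool:
        return user_id in self._data and user_id not in self._objections


store = UserDataStore()
store.correct("u1", "email", "old@example.com")
store.correct("u1", "email", "new@example.com")  # rectification
exported = store.export("u1")
store.record_objection("u1")
processing_allowed = store.may_process("u1")
store.delete("u1")
after_delete = store.access("u1")
```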

Data privacy is a valuable asset for your company

Building AI-powered products is exciting. You are doing cutting-edge work, making your business operations scale faster, and revolutionising how people interact with technology. But handling such large amounts of user data also comes with responsibility.

So, if you are starting your AI development journey, keep data privacy at the heart of your product. Build it into your architecture, your UX, and your team’s mindset from the start.

FAQs

What happens if my AI system violates the GDPR rules?

For serious infringements, such as violating the core data protection principles, organisations can face fines of up to €20 million or 4% of their total worldwide annual turnover from the previous financial year, whichever is higher. Less serious infringements, such as breaching controller and processor obligations like security or record-keeping requirements, can result in fines of up to €10 million or 2% of worldwide annual turnover, again whichever is higher.
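The "whichever is higher" rule is easy to misread, so here is a small worked sketch of the upper limits for a company. It only computes the statutory maximum; actual fines are set case by case using the criteria in Article 83(2). The function name is hypothetical.

```python
def max_gdpr_fine_eur(worldwide_turnover_eur: float, serious: bool = True) -> float:
    """Upper limit of a GDPR fine for a company (Article 83(4) and 83(5)).

    The cap is the higher of a fixed amount and a percentage of total
    worldwide annual turnover for the preceding financial year.
    """
    fixed_cap, share = (20_000_000.0, 0.04) if serious else (10_000_000.0, 0.02)
    return max(fixed_cap, share * worldwide_turnover_eur)


# €100m turnover: 4% is only €4m, so the €20m fixed cap is higher.
small_company_cap = max_gdpr_fine_eur(100_000_000)
# €2bn turnover: 4% is €80m, which exceeds the fixed cap.
large_company_cap = max_gdpr_fine_eur(2_000_000_000)
# Lower tier for the same company: 2% of €2bn is €40m.
large_company_lower_tier = max_gdpr_fine_eur(2_000_000_000, serious=False)
```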

Can I train AI models on anonymised data to avoid GDPR?

Yes, you can. Under GDPR, truly anonymised data is not considered personal data, which means the regulatory framework will no longer apply to it. But you will need to make sure that it is truly anonymised, not just pseudonymised, because if re-identification is possible, GDPR will still apply to your product or business operation.
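Removing names and emails is not enough on its own: combinations of ordinary attributes such as postcode, age band and gender can single people out. One simple heuristic for spotting this is k-anonymity, sketched below: k is the size of the smallest group of rows sharing the same values for those quasi-identifiers, and k = 1 means someone is unique in the dataset. The column names and rows are hypothetical, and a high k does not prove a dataset is anonymised; it is one check among several.

```python
from collections import Counter


def smallest_group_size(rows, quasi_identifiers):
    """Return k for the dataset: the size of its rarest quasi-identifier combination."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())  # raises ValueError if rows is empty


rows = [
    {"postcode": "SW1A", "age_band": "30-39", "gender": "F", "basket_value": 42},
    {"postcode": "SW1A", "age_band": "30-39", "gender": "F", "basket_value": 17},
    {"postcode": "EC1V", "age_band": "60-69", "gender": "M", "basket_value": 88},
    {"postcode": "EC2A", "age_band": "60-69", "gender": "M", "basket_value": 23},
]
k = smallest_group_size(rows, ["postcode", "age_band", "gender"])
k_without_postcode = smallest_group_size(rows, ["age_band", "gender"])
```

Dropping the postcode raises k from 1 to 2 in this example, at the cost of detail the model might otherwise use.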

Can I use publicly available data to train my AI system under GDPR?

Not always. Just because data is publicly accessible (e.g. on social media) does not necessarily mean that it is free from GDPR protection laws. If the data identifies a person, you still need a lawful basis for processing it, even if it was posted publicly.